In this article, you will be introduced to the basics of maintaining quality. This involves quality assurance, quality control, and metrology. We use quality assurance to gain confidence that quality requirements will be fulfilled. Quality control is used to check that requirements have been fulfilled. This is a subtle difference, and in practice, the terms are sometimes used interchangeably.

Metrology is the science of measurement. It is how we ensure that we can confidently compare the results of measurements made all over the world. These principles can apply to products or services, but I’m going to focus on manufacturing and how these three fundamental concepts relate to each other in that context. I have therefore avoided the details of specific methods, and I don’t get into any of the maths. I’ll save that for a later article.

Origins of Measurement


The Egyptians used standards of measurement, with regular calibrations, to ensure stones would fit together in their great construction projects. But modern quality systems really began during the industrial revolution. Before then, mechanical goods were built by craftsmen who would fettle each part individually to fit into an assembly. This meant each machine, and every part in it, was unique. If a part needed to be replaced then a craftsman would need to fit a new part.


In the late 18th Century, French arms manufacturers started to make muskets with standard parts. This meant the army could carry spare parts and quickly exchange them for broken ones. These interchangeable parts were still fettled to fit into the assembly, but instead of fitting each part to the individual gun it was fit to a master part.

A few years later, American gun makers started using this method but adapted it to suit their untrained workers. They filed gauges to fit the master part. Workers would then set jigs and production machines using the gauges, and also use the gauges to check the parts. This enabled a row of machines, each carrying out a single operation with an unskilled operator, to produce accurate parts. The parts could then be quickly assembled into complex machines.

The foundation for modern manufacturing had thus been laid, over 100 years before Ford would apply these ideas to a moving production line.

Calibration, True Value, and Measurement Error

A system of master parts, gauges, and single-purpose machines worked when an entire product was produced in a single factory. Modern global supply chains need a different system.

Instead of having a physical master part, we have a drawing or a digital CAD model. Specified tolerances ensure the parts will fit together and perform as intended. Rather than every manufacturer coming to a single master part to set their gauges, they have their measurement instruments calibrated. The instruments are then used to set the production machines and to check the parts produced. All quality depends on this calibration process.

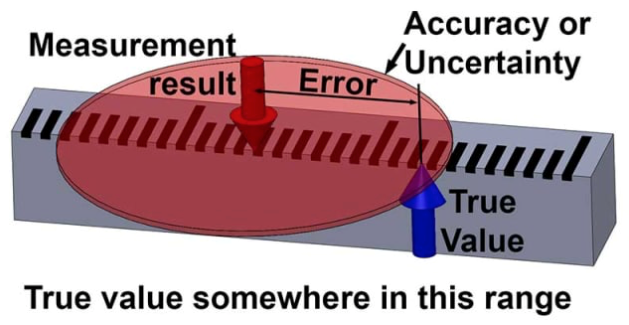

The most important concept to understand is that all measurements have uncertainty. If I asked you to estimate the height of this text you might say, “It’s about 4 mm”. Using the word “about” implies that there is some uncertainty in your estimate.

In fact, we can never know the exact true value of anything. All measurements are actually estimates and have some uncertainty. The difference between a measurement result and the true value is the measurement error. Since we can’t know the true value, we also can’t know the error: these are unknowable quantities.

All we can quantify about the world around us is the results of measurements, and these always have some uncertainty, even if this uncertainty is very small.

If you were to estimate the height of this text as “about 4 mm, give or take 1 mm” then you have now assigned some limits to your uncertainty. But you still can’t be 100% sure that it’s true.

You might have some level of confidence, say 95%, that it is true. If you were to increase the limits, to say, give or take 2 mm, then your confidence would increase, perhaps to 99%. So the uncertainty gives some bounds within which we have a level of confidence that the true value lies.
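The link between the width of the limits and the level of confidence can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it assumes the estimate follows a normal distribution, and the 0.5 mm standard uncertainty is a made-up figure, not something taken from a real measurement. The function name `confidence_for_limits` is hypothetical.

```python
import math


def confidence_for_limits(half_width, standard_uncertainty):
    """Probability that the true value lies within +/- half_width of the
    estimate, ASSUMING a normal distribution with the given standard
    uncertainty (one standard deviation)."""
    k = half_width / standard_uncertainty  # limits expressed in standard deviations
    return math.erf(k / math.sqrt(2))


# Assumed, illustrative standard uncertainty for the text-height estimate (mm)
u = 0.5

for half_width in (1.0, 2.0):
    confidence = confidence_for_limits(half_width, u)
    print(f"4 mm +/- {half_width} mm -> {confidence:.2%} confidence")
```

Under these assumptions, limits of 1 mm give roughly 95% confidence, and widening them to 2 mm pushes the confidence very close to 100%. The exact figures depend entirely on the assumed distribution; the point is only that wider limits mean higher confidence.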

OK, philosophy class over!

Uncertainty and Quality

Once we have determined the uncertainty (often loosely called the ‘accuracy’) of a measurement, we can apply it to decide whether a part conforms to a specified tolerance. For example, let’s say that a part is specified to be 100 mm +/- 1 mm. We measure it and get a result of 100.87 mm.

Is the part in specification?

The simple answer is: we don’t know. Maybe it is, but maybe there was an error in our measurement and the part is actually more than 101 mm. Maybe there was an even bigger error and the part is actually less than 99 mm!

Unless we know what the uncertainty of the measurement is, we have no idea how confident we can be that the part is within specification. Let’s suppose that the uncertainty of the measurement was given so that the measurement result is 100.87 mm +/- 0.1 mm at 95% confidence. Now we can say with better than 95% confidence that the part is within specification. So understanding and quantifying the uncertainty of measurements is critical to maintaining quality.
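The reasoning above can be written as a simple check. This is a minimal sketch, not a formal decision rule: the function name `conformance` and its three outcome labels are my own illustrative choices, and it simply asks whether the whole uncertainty interval sits inside the tolerance band.

```python
def conformance(measured, nominal, tolerance, expanded_uncertainty):
    """Compare the interval measured +/- expanded_uncertainty with the
    tolerance band nominal +/- tolerance.

    Returns one of three illustrative labels:
      "conforms"         - the whole interval is inside the tolerance band
      "does not conform" - the whole interval is outside the tolerance band
      "undetermined"     - the interval straddles a tolerance limit
    """
    lower_limit = nominal - tolerance
    upper_limit = nominal + tolerance
    low = measured - expanded_uncertainty
    high = measured + expanded_uncertainty

    if low >= lower_limit and high <= upper_limit:
        return "conforms"
    if high < lower_limit or low > upper_limit:
        return "does not conform"
    return "undetermined"


# The example from the text: 100 mm +/- 1 mm, measured 100.87 mm +/- 0.1 mm
print(conformance(100.87, 100.0, 1.0, 0.1))  # conforms
# A result closer to the limit cannot be decided with the same uncertainty
print(conformance(100.95, 100.0, 1.0, 0.1))  # undetermined
```

The "undetermined" case is the one that matters in practice: the closer a result is to the tolerance limit, the smaller the measurement uncertainty must be before a confident decision can be made.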

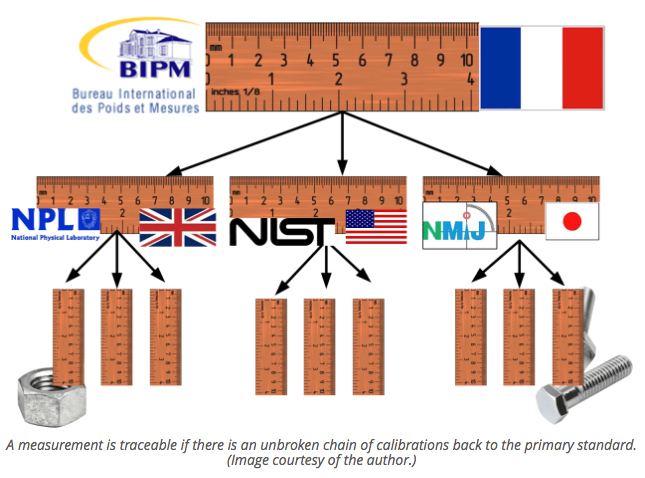

Now, let’s consider calibration and the associated concept of traceability. This is a fundamental aspect of uncertainty. A calibration is a comparison with a reference, and the uncertainty of this comparison must always be included, for reasons explained below.

A traceable measurement is one which has an unbroken chain of calibrations going all the way back to the primary standard. In the case of length measurements, the primary standard is the definition of the meter: the distance traveled by light in a vacuum in 1/299,792,458 of a second, as realized by the International Bureau of Weights and Measures (BIPM), which is based in Sèvres, near Paris.

The inch has been defined as exactly 25.4 mm, in industrial standards since the 1930s and by international agreement since 1959, and is therefore also traceable to the same meter standard. All measurements must be traceable back to the same standard to ensure that parts manufactured in different countries will fit together.
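To illustrate why the uncertainty of every calibration in the chain matters, here is a rough sketch of how uncertainty might accumulate from the primary standard down to the shop floor. The chain, the level names, and all of the numbers are hypothetical. The sketch also assumes that the contribution at each step is independent, so the contributions can be combined as a root sum of squares.

```python
import math

# Hypothetical traceability chain: (level, standard uncertainty added at
# this calibration step, in micrometres). All values are illustrative.
chain = [
    ("national standard", 0.02),
    ("calibration laboratory reference", 0.1),
    ("workshop gauge block", 0.5),
    ("shop-floor micrometer", 2.0),
]


def accumulated_uncertainty(steps):
    """Root-sum-square of the step uncertainties, assuming each calibration
    step contributes an independent uncertainty."""
    return math.sqrt(sum(u ** 2 for _, u in steps))


# Uncertainty inherited at each level includes everything above it
for i, (level, _) in enumerate(chain, start=1):
    total = accumulated_uncertainty(chain[:i])
    print(f"{level}: {total:.3f} um")
```

Each level inherits the uncertainty of everything above it, so the uncertainty can only grow as you move down the chain. A break in the chain means the inherited part is simply unknown.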

Uncertainty and Error

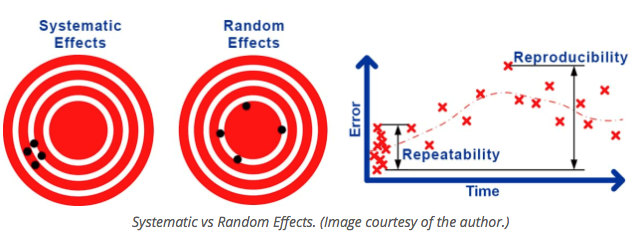

The uncertainty of measurements arises from different sources. Some of these will lead to a consistent error, or bias, in the result.

For example, the unknown error present when an instrument was calibrated will lead to a consistent error whenever it is used. This type of effect is known as a systematic uncertainty leading to a systematic error. Other sources will lead to errors which change randomly each time a measurement is made.

For example, turbulence in the air may cause small, randomly changing perturbations of laser measurements, while mechanical play and alignment may cause randomly changing errors in mechanical measurements. This type of effect is known as a random uncertainty leading to a random error.
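The difference between the two kinds of error is easy to see in a simulation, where, unlike in real life, we get to choose the true value. This is a toy sketch: the true value, the bias, and the noise level are all invented, and the random error is assumed to be normally distributed.

```python
import random
import statistics

random.seed(1)  # fixed seed so the illustration is repeatable

TRUE_VALUE = 100.0  # mm; unknowable in practice, known only because we simulate
BIAS = 0.05         # mm; systematic error, e.g. inherited from calibration
NOISE_SD = 0.02     # mm; standard deviation of the random error


def measure():
    """One simulated measurement: true value + fixed bias + random noise."""
    return TRUE_VALUE + BIAS + random.gauss(0.0, NOISE_SD)


readings = [measure() for _ in range(1000)]

mean_error = statistics.mean(readings) - TRUE_VALUE  # approaches the bias
spread = statistics.stdev(readings)                  # approaches the noise level

print(f"mean error: {mean_error:.3f} mm")
print(f"spread:     {spread:.3f} mm")
```

Averaging many readings reduces the effect of the random error, but the mean still sits away from the true value by the systematic error. No amount of repetition with the same instrument reveals the bias, which is why it has to be estimated from the calibration.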

It is conventional to divide random uncertainty into repeatability, the random uncertainty of results under the same conditions, and reproducibility, the random uncertainty under changed conditions.

Of course, the conditions can never be exactly the same or completely different, so the distinction is somewhat vague. The conditions that might change include the time of measurement, the operator, the instrument, the calibration, and the environment.

There are two widely used methods to quantify the uncertainty of a measurement. Calibration laboratories and scientific institutions normally carry out Uncertainty Evaluation according to the Guide to the Expression of Uncertainty in Measurement (GUM).

The GUM method involves first considering all of the influences which might affect the measurement result. A mathematical model must then be determined, giving the measurement result as a function of these influence quantities. By considering the uncertainty in each input quantity and applying the ‘Law of Propagation of Uncertainty’, an estimate for the combined uncertainty of the measurement can be calculated.

The GUM approach is sometimes described as bottom-up, since it starts with a consideration of each individual influence. Each influence is normally listed in a table called an uncertainty budget, which is used to calculate the combined uncertainty.
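As a rough idea of what an uncertainty budget looks like, here is a small sketch for measuring a 100 mm steel part. Every number in it is made up for illustration. It assumes the influences are uncorrelated, so the contributions combine as a root sum of squares (the simplest form of the Law of Propagation of Uncertainty), and it uses a coverage factor of 2 for roughly 95% confidence, which assumes a normal distribution.

```python
import math

# Illustrative uncertainty budget. Each row is:
# (influence, standard uncertainty, sensitivity coefficient)
# The sensitivity coefficient converts the influence into mm of length.
budget = [
    ("instrument calibration", 0.030, 1.0),  # mm
    ("repeatability", 0.020, 1.0),           # mm
    ("temperature", 0.5, 0.0012),            # degC, and mm per degC for 100 mm of steel
    ("alignment", 0.010, 1.0),               # mm
]


def combined_uncertainty(rows):
    """Combined standard uncertainty for uncorrelated influences:
    square root of the sum of (sensitivity * uncertainty) squared."""
    return math.sqrt(sum((c * u) ** 2 for _, u, c in rows))


combined = combined_uncertainty(budget)
expanded = 2 * combined  # coverage factor k = 2, roughly 95% confidence

for name, u, c in budget:
    print(f"{name:<24} contributes {abs(c * u):.4f} mm")
print(f"combined standard uncertainty: {combined:.4f} mm")
print(f"expanded uncertainty (k=2):    {expanded:.4f} mm")
```

Laying the budget out this way also shows which influence dominates. In this made-up example the calibration of the instrument matters far more than the temperature, so that is where any effort to improve the measurement should go first.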